Intel has been making moves at a rapid pace lately: publishing a variety of new algorithms at academic conferences, acquiring Nervana and Movidius in quick succession, and at the same time announcing its latest deep learning processor, Knights Mill. In its announcement, Intel stated that four Knights chips deliver 2.3 times the computing speed of four GPUs, pointing its sword directly at NVIDIA, the old rival it fought for many years in the PC era. Even after NVIDIA responded firmly, with a hint of retaliation in its tone, Intel defended its data in a public statement and added that, among the processors used for deep learning last year, GPUs accounted for less than 3%.

What is the truth? Weighing the various factors, we cannot really say which is better suited to deep learning development: GPUs or Intel's latest deep learning chip. But one thing is certain: the GPU in Intel's statement is not the latest deep learning GPU, the Tesla P100, but a Maxwell-architecture NVIDIA GPU that came to market some 18 months ago. The 2.3x figure is therefore likely inflated. We can understand Intel's eagerness to promote its own products, but is it really appropriate to pit its next-generation product against a rival's previous-generation product that has been on sale for more than a year? Intel surely understands this, and it is not the first time Intel has used such tactics in its marketing (Intel used to claim that its integrated graphics outperformed NVIDIA's discrete graphics cards). Still, the fact that Intel keeps resorting to publicity that may hurt itself more than its rival shows how much importance it places on the AI and deep learning markets. Its series of acquisitions, earlier and recent, indirectly confirms this.

As early as the PC era, the two companies' graphics processors were often compared. Image source: YouTube

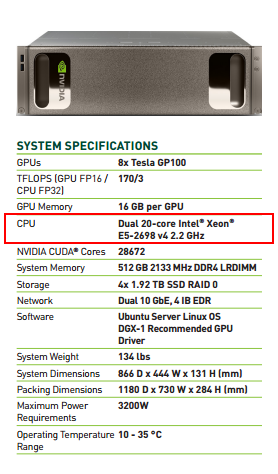

But why be so persistent?

There are currently four active players in the deep learning market: CPUs, GPUs, FPGAs, and dedicated processors. The use of FPGAs and dedicated processors is still at an exploratory stage, and few developers actually use them, so competition in this market is mainly between GPUs and CPUs. One could even say it is between Intel and NVIDIA. But every deep learning developer knows that GPUs have a large inherent advantage over CPUs in deep learning development, especially in algorithm training. Why does Intel insist on fighting on a battlefield where it seems to be at an innate disadvantage? It should be noted that, however good the GPU is, it cannot work on its own. At least nobody runs it that way, because the GPU's design makes it inefficient at logic operations. Even NVIDIA's latest deep learning supercomputer, the DGX-1, contains two Intel Xeon processors (although NVIDIA plays this down or even omits it in many promotional documents). It seems that however well NVIDIA does in this market, Intel will always get its share. So why is Intel so eager to take NVIDIA's place?

The CPU entry of the DGX-1 configuration table on NVIDIA's official website plainly reads: Dual Intel Xeon E5-2698

Dreams and Crises Behind Prosperity

To clarify this issue, we must widen our view beyond artificial intelligence to Intel's overall strategic layout. In fact, the title above is not very accurate, because Intel's business has not looked all that prosperous lately. In recent years, Intel's core profit source, the CPU, has been hit by three factors: the slowdown of the PC market, the explosion of the mobile market that Intel failed to break into, and Moore's Law gradually approaching its limit and becoming almost ineffective. Simply selling CPUs can still make money, but only by developing higher-end (and more expensive) chips, building its image as the leader, and then selling them to more people can Intel earn enough to support the company's development. Together, these factors have made Intel realize that if it merely holds on to its CPU business, it will soon face a huge crisis. Indeed, over the past year, news of declining profits and layoffs has been lingering around Intel.

Intel was once one of the driving forces behind the advancement of computer technology, and it clearly hopes to keep that position. Since last year, Intel has been undergoing a slow and painful but determined transformation. It wants to remain a world-leading company in cloud computing, cloud storage, and the Internet of Things, and it has invested heavily in these fields and made many adjustments for them.

Ideals are grand, but reality is always harsh. After a few years of high-profile adjustment, the Internet of Things, once the object of high hopes, seems to have lost its way. There have been no real killer products, many products and business models that were once favored have disappeared, and the market as a whole has not taken off. Even in cloud computing, the area Intel values most for the future, the initial shakeout is basically over: Amazon, Microsoft, Google, Alibaba Cloud, IBM, and others have established a foothold in the market. The chance that Intel can come from behind is getting smaller and smaller.

In 2016, with the birth of AlphaGo, artificial intelligence suddenly became hot, and the previously quiet competition in AI hardware rose to a new height. Watching its old rival NVIDIA reap eye-catching gains from its focus on optimizing the parallel computing that GPUs are good at, how could Intel be willing to let this market go? What's more, since deep learning currently requires very large amounts of data and computation, it can become one of the important service targets of cloud computing. At present, FPGAs have not yet gained real traction in the deep learning market, and giants like Google prefer to develop their own dedicated chips. It can therefore be said that, for deep learning chips, there is still a large market among individual developers and small and medium-sized enterprises. NVIDIA is currently the only company established in this market, and Intel is not necessarily without a chance if it makes a strong entry. Even if Intel cannot ultimately surpass NVIDIA, it can still build an advantage over later entrants and secure its position. Of course, Intel will certainly not be content with second place. It is aiming for number one.

Taking on the porcelain job, with or without the diamond drill?

In the end, does Intel have the opportunity to counterattack and regain, in artificial intelligence and deep learning, the leadership it held in the PC era? We think Intel is not without a chance. NVIDIA does appear to be far ahead, relying on GPU performance and supporting software optimization built up over years, but even there Intel has a real possibility of turning the tables.

Betting on FPGAs

In June of last year, Intel acquired Altera, the well-known FPGA manufacturer, for an unprecedented $16.7 billion. At that time, the industry's interpretation of the move focused mainly on the server market and Intel's positioning in the Internet of Things market, and Intel's own explanation of the acquisition did not explicitly mention machine learning. Looking back now, however, the acquisition may have been driven to a considerable degree by Intel's recognition of Altera's potential in the field of artificial intelligence.

Whatever the thinking was at the time, Intel is by now fully aware of the value of this acquisition for artificial intelligence. The potential of FPGAs relative to GPUs is that their computing speed is comparable, while they have a significant advantage over GPUs in cost and power consumption. There are disadvantages too, which we will come to later, but the potential of FPGAs is obvious. As a product to be brought to market, the biggest problem FPGAs need to overcome, and also the easiest one to overcome, is availability. Most PCs are equipped with a discrete GPU, whether high-end or low-end, and for individuals developing and training small and medium-sized neural networks, their performance is basically sufficient. An FPGA, by contrast, is not something found in an ordinary computer. It is more common in refrigerators, televisions, other appliances, and laboratories, so getting hold of an FPGA that can be used for deep learning development is actually quite troublesome. As you can imagine, this is one of the issues Intel will work hard to solve.

Image source: EETimes

Integrated Graphics - Untapped Territory

Some people may not realize that Intel actually has strong capabilities in designing and manufacturing graphics hardware. It is even the world's largest GPU maker, because many low-end computers and ultrabooks on the market have no discrete graphics card, while almost every Intel CPU includes integrated graphics. Intel originally intended integrated graphics to handle everyday graphics tasks, so that users who do not need to run high-performance programs could get a usable computer at very low cost. In recent years, however, integrated graphics have become more and more powerful and can now run many mid-range games. The theoretical performance of Iris Pro Graphics 6200 has even reached the level of discrete graphics cards. Yet at present nobody uses integrated graphics for even small-scale deep learning, because it is still slow, even though the raw computing power is not much different. Why is the speed so poor? That brings us to the next point:

Software! Software! Software!

There is a strange phenomenon in IT today: many people talk about having excess performance while watching the phones and computers in their hands become laggier and laggier. This is really a problem of software optimization. With the same computing power, well-optimized software delivers much higher performance. The GPU has been nurtured by NVIDIA for many years and already has a very complete set of deep learning software support. NVIDIA's GPUs are well optimized for and compatible with mainstream deep learning frameworks such as Caffe, Theano, and Torch, as well as NVIDIA's own CUDA. FPGAs have far less optimization behind them, so FPGA-based development is much harder than GPU-based development. This is the other FPGA disadvantage mentioned earlier.

Intel is not a software company. When Intel and software are mentioned together, most people think of the drivers for the hardware it manufactures. But AI has never been a simple matter, and anyone who wants to stay in this field for the long term certainly cannot rely on hardware alone. In fact, Intel has begun to show its efforts in software and algorithms. Last week, Intel China announced its own innovation in deep learning algorithms: the "dynamic surgery" algorithm. This shows that Intel has begun to work hard on algorithm theory. The ability to innovate in this area shows that Intel has a deep understanding of these algorithms, and we believe its next step is to apply that understanding to the optimization of future deep learning chips.

Intel's confidence

In fact, in its constant challenges to NVIDIA, Intel is also trying to tell everyone one thing:

GPUs are actually not that important for deep learning.

Many people, myself included when I first heard this view, probably reacted with a "WTF" expression. But from a certain angle, what Intel is saying may make sense. When we talk about AI hardware, we tend to picture tall server racks and flashing lights, or at least a row of TitanX cards on one side of the board and Xeon CPUs on the other. But few people outside the AI profession realize that the development and application of artificial intelligence is divided into several stages, of which only "algorithm training" has a genuine demand for strong computing power. Data screening, algorithm development, effectiveness testing, and even the deployment of the final algorithm do not require much computing power.

Of course, in a good project, algorithm training should be carried out throughout the entire application process, but this also means that having superior computing power is not the only feature required for a chip used in the field of artificial intelligence.

This is the biggest source of Intel's confidence: its understanding of artificial intelligence is in no way weaker than NVIDIA's, and it clearly knows what it is good at and where it can take advantage. Let's go back and take a closer look at the areas that Intel CEO Brian Krzanich said in his blog Intel intends to focus on after the transition. Two of them are particularly critical:

One: The different forms of "things" in the Internet of Things. Almost all IoT devices share two distinct features: they are small, and they are battery-powered. For these devices, a GPU's size and power consumption are obviously too large, whereas FPGAs and dedicated processing chips are a good fit. This is Intel's first opportunity.

For an IoT device, a board of this size already counts as large, yet clearly even such a board has no room to plug in a GPU, let alone power it.

Two: Connectivity. As mentioned above, in a good project, algorithm training should continue throughout the entire application process so that the service can always offer consumers the best experience. However, if all training is done locally, it faces not only a computational bottleneck but also the problem that too little data can be collected from a single user. (We leave aside AI based on small-sample unsupervised learning, which may appear a long time from now and would already be almost human-like.) At the current stage of AI development, concentrating all the data in the cloud for computation is clearly the more rational and effective approach. This places very high demands on communications, and Intel happens to have accumulated a lot in this area! Although Intel's communications division has lost money year after year, in the current situation it unexpectedly has new value and potential.

Both of these are areas that NVIDIA has never been able to enter, and both are things AI currently needs. Intel has identified them. That does not mean it will succeed in them, but it does give Intel the confidence to compete with NVIDIA. Beyond direct competition, Intel is also telling everyone: in the field of artificial intelligence, NVIDIA has never been walking alone!

Those with the courage to face change will not have bad luck

Intel does face unprecedented challenges, but it is not without opportunities. Fortunately, Intel has seen the opportunity and begun working hard to catch up with the pioneers in these areas. The arrival of the mobile wave put many traditional Internet giants in a difficult position, yet most of those that went through a transition are still alive today, and some are even doing well. Intel is one of them. It used to be the leader of the computer industry, and Intel CEO Brian Krzanich has expressed the hope that Intel can continue to use the value of Moore's Law to lead the industry forward. The current situation is not optimistic, but once it finds the right path, Intel may still have the opportunity to completely reverse the situation.
