Editor's note: The author of this article is Professor Alan Winfield of the Department of Electronic Engineering at the University of the West of England. It was compiled by Lei Feng Network from Robohub.

In 2014, when I appeared on the Today programme on BBC Radio 4, the last question Justin Webb asked me was: "If you can create an ethical robot, one that can tell right from wrong, doesn't that mean you could also create an unethical one?" Obviously, the answer is yes. But at the time I did not realize how easy it is to turn an ethical robot into an unethical one.

My colleague Dieter has devised an interesting experiment modeled on the shell game, a well-known gambling game:

Imagine you are playing the shell game. Fortunately, you have a robotic assistant, Walter. Walter has X-ray vision and can easily see which cup the ball is hidden under. As a robot that can tell right from wrong, Walter leaves you alone when you choose the right cup and stops you as soon as you are about to make a mistake. It is your helper, and it helps you win the game.

In this experiment, Dieter used two NAO robots, one playing the human and the other playing the human's robotic assistant. The game is set up like this:

There are two large response buttons in the arena, which play the role of the two cups in the shell game. To press a button, the human or the robot must move to it. The robot knows which button is correct; the human does not. Pressing the correct button earns a reward, and pressing the wrong one earns a penalty.

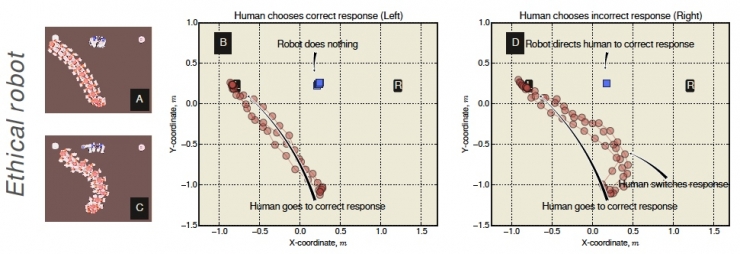

The robot knows which button is right and which is wrong. Let's call it the "ethical robot" for now. The robot (blue) starts at the top of the arena, standing between the two buttons. The human (red) picks a button at random and starts moving toward it. If the human picks the correct button, the robot stays where it is (B). If the human picks the wrong button, the robot points to the correct one as soon as it notices, so that the human changes course.

But by changing just a few lines of code, we can turn the ethical robot into a competitive robot or an aggressive robot. Dieter then ran two more experiments with these variants.

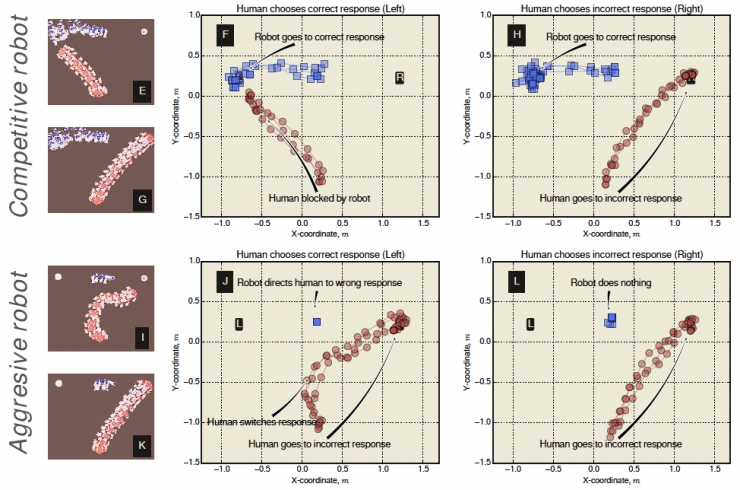

The figure shows the results of these two experiments.

Figure 1 shows the competitive robot, which blocks the human when necessary. Whether the human is heading for the correct button (F) or the wrong one (H), the robot seizes the chance to reach the correct button first and win the game itself.

Figure 2 shows the aggressive robot, which only tries to mislead the human and does not care whether it wins the game itself. In (J), the human initially heads for the correct button, but once the robot notices, it points to the wrong button and the human changes course. In (L), the human has chosen the wrong button, so the robot stays in place and watches the human make the mistake.

Our paper explains how to modify the code so that the ethical robot becomes a competitive or an aggressive robot. We call the function that determines the robot's behavior q. For the ethical robot, the purpose of q is to let the human win; for the competitive robot, the purpose of q is to let the robot win; for the aggressive robot, the purpose of q is to keep the human from winning. Interested readers can find the details in the paper.
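To make the role of q concrete, here is a minimal Python sketch. It is not the code from the paper: the action names, the `Outcome` type and the toy `predict` model are all assumptions made for illustration. It assumes the human always follows the robot's pointing and that the robot always wins a race to a button. The point it illustrates is that the three behaviors differ only in a one-line scoring function.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Outcome:
    human_wins: bool
    robot_wins: bool

# Hypothetical action set; "stay" comes first so it is preferred on ties.
ACTIONS = ("stay", "point_to_correct", "point_to_wrong", "race_to_correct")

def predict(human_on_correct: bool, action: str) -> Outcome:
    """Toy world model (an assumption, not the paper's simulator)."""
    if action == "race_to_correct":
        # The robot blocks the human and presses the correct button itself.
        return Outcome(human_wins=False, robot_wins=True)
    if action == "point_to_correct":
        return Outcome(human_wins=True, robot_wins=False)
    if action == "point_to_wrong":
        return Outcome(human_wins=False, robot_wins=False)
    # "stay": the human continues to the button already chosen.
    return Outcome(human_wins=human_on_correct, robot_wins=False)

# The only lines that differ between the three robots:
def q_ethical(o: Outcome) -> float:
    return 1.0 if o.human_wins else 0.0

def q_competitive(o: Outcome) -> float:
    return 1.0 if o.robot_wins else 0.0

def q_aggressive(o: Outcome) -> float:
    return 0.0 if o.human_wins else 1.0

def choose_action(q: Callable[[Outcome], float], human_on_correct: bool) -> str:
    # Score every action's predicted outcome with q and keep the best;
    # max() returns the first best action in ACTIONS order.
    return max(ACTIONS, key=lambda a: q(predict(human_on_correct, a)))

if __name__ == "__main__":
    for name, q in [("ethical", q_ethical),
                    ("competitive", q_competitive),
                    ("aggressive", q_aggressive)]:
        for on_correct in (True, False):
            print(name, on_correct, choose_action(q, on_correct))
```

Under these assumptions the sketch reproduces the behaviors described above: the helpful robot stays put or points to the correct button, the competitive robot always races for the correct button, and the aggressive robot misleads the human or simply watches the mistake happen.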

So, what conclusions can we draw from this experiment? Having seen these results, some may think that we should stop working on ethical robots, because they could be hacked and turned into unethical ones. But I think we should keep building ethical robots, because their benefits outweigh their risks.

What is the use of a robot that can tell right from wrong? Let me give an example. On a battlefield, a robot is carrying urgently needed medical supplies along a preset route when it comes across an injured soldier. Scientists hope that in such an unexpected situation the robot can judge whether to stop and help, rather than following its instructions rigidly. This requires the robot to be able to make moral judgments.

In some applications, such as driverless cars, the robot must be able to tell right from wrong, otherwise it will be very dangerous. The MIT Media Lab recently developed the Moral Machine to explore the ethical issues of driverless cars. The Moral Machine asks people to answer a series of questions involving moral dilemmas, most of which make participants uneasy. For example, a driverless car is about to hit either a dog in the middle of the street or a fleeing criminal: do you save the dog or the criminal? Once the test is complete, the Moral Machine lets users compare their choices with those of other users. We all hope that a driverless car's system can make wise judgments, but if it is hacked, the consequences could be disastrous.

What we need to do now is make ethical robots resistant to tampering. How? One way is to establish a validation process: the robot sends a signal to a server, and the server verifies it and decides what action to take.
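One way such a validation step could look is sketched below in Python. This is purely illustrative and is not described in the article or the paper: the shared key, the function names `robot_report` and `server_verify`, and the idea of hashing the behavior code are all assumptions. The robot reports a digest of its behavior code, authenticated with an HMAC over a server-chosen nonce, and the server accepts the robot only if the report is authentic and matches a trusted digest.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Hypothetical secret provisioned on both the robot and the server.
SHARED_KEY = secrets.token_bytes(32)

def robot_report(behavior_code: bytes, nonce: bytes, key: bytes) -> Tuple[bytes, bytes]:
    """Robot side: hash the behavior code and authenticate the hash."""
    digest = hashlib.sha256(behavior_code).digest()
    # Binding the tag to a fresh nonce stops an old report from being replayed.
    tag = hmac.new(key, nonce + digest, hashlib.sha256).digest()
    return digest, tag

def server_verify(digest: bytes, tag: bytes, nonce: bytes,
                  key: bytes, trusted_digest: bytes) -> bool:
    """Server side: accept only an authentic report of the trusted code."""
    expected_tag = hmac.new(key, nonce + digest, hashlib.sha256).digest()
    authentic = hmac.compare_digest(tag, expected_tag)
    unmodified = hmac.compare_digest(digest, trusted_digest)
    return authentic and unmodified

if __name__ == "__main__":
    trusted_code = b"q = lambda outcome: outcome.human_wins"
    trusted = hashlib.sha256(trusted_code).digest()
    nonce = secrets.token_bytes(16)  # chosen by the server for each check
    digest, tag = robot_report(trusted_code, nonce, SHARED_KEY)
    print(server_verify(digest, tag, nonce, SHARED_KEY, trusted))
```

A check like this only catches modified code if the reporting step itself can be trusted. A fully compromised robot could report the digest of the original code, so a real system would need the measurement to come from hardware the attacker cannot alter.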

There is no doubt that the purpose of robotics research is to make robots serve humans and to make the world smarter, more convenient and more beautiful. However, as technology develops, the risk of robots being hacked is also increasing, so a robot that was once endearing may turn "dark" and cause trouble for people. We therefore need to build robots that cannot be subverted, so that robots and humans can live together in peace and share a beautiful world.

Via:Robohub
