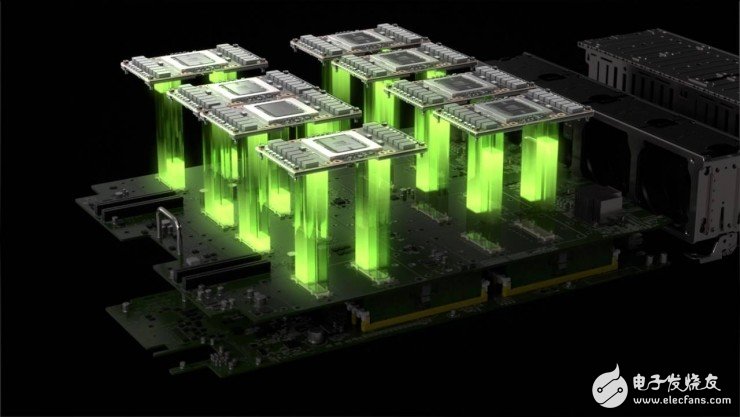

At this year's GTC in Silicon Valley, NVIDIA released the DGX-1 deep learning supercomputer. NVIDIA CEO Jensen Huang (Huang Renxun) called it "a data center that fits into a chassis."

The DGX-1 includes eight Pascal-based Tesla P100 accelerators and four 1.92TB SSDs. It uses NVLink, which is 5 to 12 times faster than traditional PCIe, to move data between CPU and GPU and between GPUs. In deep learning, it is 75 times faster than an ordinary dual-CPU Xeon E5-2697 v3 server, and its overall performance is equivalent to that of 250 ordinary x86 servers. A single DGX-1 costs $129,000.

After GTC, Huang personally delivered the first DGX-1 to OpenAI, Elon Musk's artificial intelligence project. NVIDIA will also give priority for the first batch of DGX-1 systems to research institutions that have made outstanding contributions to artificial intelligence in recent years. The list includes Stanford, UC Berkeley, CMU, MIT, and the Chinese University of Hong Kong, among others. In mainland China, the publicly available information is that Hikvision placed the first domestic DGX-1 order through Dawning in July; NVIDIA told us that the DGX-1 has ten customers in China.

At HPC China 2016, which wrapped up in October, we talked to senior executives at NVIDIA about their views on high-performance computing and why they built this supercomputer.

Most of the next generation of programs will be written by machines

Marc Hamilton, NVIDIA's vice president of solutions and engineering architecture, expressed this view at HPC China 2016: AI will give rise to a new computing model, and most future programs will be written not by people but by deep learning networks.

As an example, he pointed to programs written in the past, such as address books or payroll systems, which deal with highly regular, structured figures. Today there is far more complex data, such as images, sound, and video. Even if all 1.3 billion people in China became programmers, they could not write enough software to handle the data generated in a single day. So most programs will be written by deep neural networks, and NVIDIA believes that most deep neural networks will run on GPUs.

NVIDIA cited two cases. In Shanghai, it has a partner in the biomedical industry that uses deep learning on MRI and CT images for cancer screening and examination. The other area moving quickly in China is security, for example comparing photos of suspects or looking for specific objects in video. A typical partner in this area is Hikvision, which bought its DGX-1 for deep learning research on video surveillance.

DGX-1 is a foolproof design

The design of the DGX-1 dates back to GTC 2015, when NVIDIA announced its latest-generation Pascal architecture, which would speed up some key deep learning applications by more than 10 times. But the new architecture also brought a new problem: developers and researchers might have to spend weeks or even months configuring these GPUs. So a few months later, Huang made a request internally: before the following year's GTC, NVIDIA's engineering department should build a server based on the Pascal architecture, so that research institutions and companies could press the power button on the chassis and use 8 GPUs for deep learning.

The DGX-1 we see today is not as simple as 8 GPUs bundled together. Marc Hamilton told us that the DGX-1 also integrates three types of software and services.

The first is support for all deep learning frameworks, such as Caffe, TensorFlow, and CNTK. The DGX-1 is optimized for the popular deep learning frameworks.

The second is the underlying library, called cuDNN, which can be understood as a CUDA library built for deep neural networks.

The third is DGX's cloud service, which amounts to providing an image of the DGX server from the cloud. A company may not know how to manage deep learning system software, but it does know how to manage a DGX-1 server in the cloud.

At present, the biggest challenge for NVIDIA is how to popularize deep learning quickly. Shen Wei, general manager of the China Enterprise Division, said that deep learning is a unique market, and that against this background, building the DGX-1 in-house is a new attempt for NVIDIA. Marc Hamilton told us that reaching 150 petaflops of floating-point performance takes about 3,400 servers if they are based on multiple GPUs, and about 100,000 servers with a traditional x86 solution. For programmers, the choice between maintaining fleets of these two sizes is obvious.
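The server counts Hamilton quoted can be turned into an implied per-server throughput with a little arithmetic. The sketch below uses only the three figures from the article; the variable and function names are illustrative, and since the article does not say which floating-point precision the 150 petaflops refers to, the results are rough comparisons rather than benchmark numbers.

```python
# Figures quoted in the article (attributed to Marc Hamilton).
TARGET_PFLOPS = 150      # target floating-point performance, in petaflops
GPU_SERVERS = 3_400      # servers needed with a multi-GPU design
X86_SERVERS = 100_000    # servers needed with a traditional x86 design


def per_server_tflops(total_pflops: float, servers: int) -> float:
    """Implied throughput per server in teraflops (1 petaflop = 1000 teraflops)."""
    return total_pflops * 1000 / servers


gpu_tflops = per_server_tflops(TARGET_PFLOPS, GPU_SERVERS)
x86_tflops = per_server_tflops(TARGET_PFLOPS, X86_SERVERS)
server_ratio = X86_SERVERS / GPU_SERVERS

print(f"Multi-GPU server: about {gpu_tflops:.1f} TFLOPS each")
print(f"x86 server:       about {x86_tflops:.1f} TFLOPS each")
print(f"x86 needs about {server_ratio:.1f}x as many servers")
```

On these figures the x86 fleet is roughly 29 times larger, which is the gap in maintenance burden that Hamilton is pointing to.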
